Note: This is the original version of a hands-on talk I gave a few months ago at The Alan Turing Institute. It was later turned into a chapter for The Turing Way: A handbook for reproducible data science, so an updated version can be found here.

What is Make?

Make is a build automation tool. It uses a configuration file called a Makefile that contains the rules for what to build. Make builds targets using recipes. Targets can optionally have prerequisites. Prerequisites can be files on your computer or other targets. Make determines what to build based on the directed acyclic graph of the targets and prerequisites. It uses the modification time of prerequisites to update targets only when needed.

Here’s a simple example that compiles some C code:

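hello: hello.c
	cc -o hello hello.c

In this example:

- hello is the target

- hello.c is the prerequisite (also called a dependency)

- cc -o hello hello.c is the recipe

Collectively, the two lines are called a rule. Note that the recipe line must start with a tab character, not spaces.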
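To make the modification-time check a bit more concrete, here is a minimal Python sketch of the decision Make takes for a single rule. The function name needs_rebuild is made up for this illustration, and the sketch ignores everything else Make does (chained rules, phony targets, and so on).

```python
import os
import tempfile


def needs_rebuild(target, prerequisites):
    """Return True if `target` is missing or older than any prerequisite."""
    if not os.path.exists(target):
        return True
    target_mtime = os.path.getmtime(target)
    # A target is out of date when at least one prerequisite is newer.
    return any(os.path.getmtime(p) > target_mtime for p in prerequisites)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "hello.c")
        binary = os.path.join(tmp, "hello")
        open(source, "w").close()
        print("before first build:", needs_rebuild(binary, [source]))

        open(binary, "w").close()
        os.utime(source, (1000, 1000))  # pretend the source is old
        os.utime(binary, (2000, 2000))  # and the binary was built later
        print("after build:", needs_rebuild(binary, [source]))

        os.utime(source, (3000, 3000))  # the source is edited again
        print("after editing hello.c:", needs_rebuild(binary, [source]))
```

The only information used is the file system itself, which is why editing hello.c is enough for Make to notice that hello is stale.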

When can (or should) I use Make?

Make is great when:

- You repeatedly run a script when some source file changes.

- You have various outputs that are created by various scripts.

- You want to document some command or set of commands that you keep forgetting.

- And much more! See the Closing Remarks section for inspiration.

How to use Make

One of the things that might scare people off from using Make is that when they see an existing Makefile it can seem daunting to understand it and tailor it to their own needs.

In this hands-on part of the tutorial we will iteratively construct a Makefile for a real project. In the Closing Remarks below there are links to a number of Makefiles that I’ve curated over time. Hopefully the experience that you gain from this tutorial will make it easy to adapt them to your specific projects.

Because there are probably enough tutorials on how to use Make to build a software project, this tutorial will show how to use Make for a data analysis pipeline. The idea is to explain different features of Make by iterating through several versions of a Makefile for this project.

The task is as follows:

Task: Given two datasets, create a summary report (in pdf) that contains the histograms of these datasets.

(Of course this data science task is kept as simple as possible to explain how to use Make.)

Setting up

To start, clone the base repository:

$ git clone https://github.com/GjjvdBurg/IntroToMake

This basic repository contains all the code that we’ll need in this tutorial:

- data: directory with two datasets that we’re going to analyse

- report: the input directory for the report

- scripts: directory for the analysis script

- output: output directory for the figures and the report

You’ll notice that there are two datasets in the data directory

(input_file_1.csv and input_file_2.csv) and that there is already a

basic Python script in scripts and a basic report LaTeX file in

report. Ensure that you have the matplotlib and numpy packages

installed:

$ pip install [--user] matplotlib numpy

You will also need a working version of pdflatex and, of course,

make.

Makefile no. 1 (The Basics)

Let’s create our first Makefile. Using your favorite editor, create a file

called Makefile with the following contents:

# Makefile for analysis report

output/figure_1.png: data/input_file_1.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_1.csv -o output/figure_1.png

output/figure_2.png: data/input_file_2.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_2.csv -o output/figure_2.png

output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/ && pdflatex report.tex && mv report.pdf ../output/report.pdf

To test that everything works correctly, you should now be able to type:

$ make output/report.pdf

If everything works correctly, the two figures will be created and the PDF report will be built.

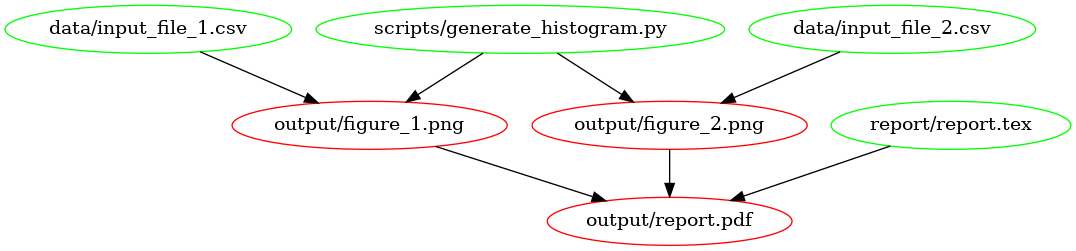

Let’s go through the Makefile in a bit more detail. We have three rules, two

for the figures that are very similar, and one for the report. Let’s look at

the rule for output/figure_1.png first. This rule has the target

output/figure_1.png that has two prerequisites: data/input_file_1.csv

and scripts/generate_histogram.py. By giving the output file these

prerequisites it will be updated if either of these files changes. You’ll

notice that the recipe line calls Python with the script name and uses command

line flags (-i and -o) to mark the input and output of the script.

The rule for the PDF report is very similar, but it has three prerequisites

(the LaTeX source and the figures). Notice that the recipe changes the working

directory before calling LaTeX and also moves the generated PDF to the output

directory. This has to do with some limitations of pdflatex. However, it’s

important to distinguish the above rule from the following:

# don't do this
output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/
	pdflatex report.tex
	mv report.pdf ../output/report.pdf

This rule places the three commands on separate lines. However, Make executes each recipe line in its own subshell, so changing the working directory in the first line has no effect on the second: pdflatex would run in the top-level directory, where there is no report.tex. Therefore, we combine the three commands on a single recipe line above. Chaining them with && keeps them in the same shell and stops at the first command that fails.
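The effect of separate subshells can be tried outside of Make. The Python sketch below starts one shell per line, roughly the way Make treats recipe lines, and prints where each command ends up running. It assumes a POSIX shell is available, the helper name run_recipe_lines is invented for this example, and it only models the working-directory behaviour, nothing else about how Make runs recipes.

```python
import os
import subprocess
import tempfile


def run_recipe_lines(lines, cwd):
    """Run every line in its own shell and return the stdout of each."""
    outputs = []
    for line in lines:
        result = subprocess.run(
            line, shell=True, cwd=cwd, capture_output=True, text=True
        )
        outputs.append(result.stdout.strip())
    return outputs


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as project:
        os.mkdir(os.path.join(project, "report"))

        # Two lines: the `cd` is forgotten as soon as its shell exits.
        separate = run_recipe_lines(["cd report", "pwd"], cwd=project)
        print("separate lines :", separate[1])

        # One line: `pwd` runs in the shell that did the `cd`.
        combined = run_recipe_lines(["cd report && pwd"], cwd=project)
        print("single line    :", combined[0])
```

With separate lines the second command still reports the project directory, while the combined line reports the report subdirectory.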

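The DAG for this Makefile looks as follows: output/report.pdf depends on report/report.tex and on the two figures, and each figure depends on its own CSV file and on scripts/generate_histogram.py.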
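The dependency graph of Makefile no. 1 is small enough to write down by hand. As a rough illustration (not how Make is implemented), the Python sketch below stores the three rules as a dictionary and uses graphlib from the standard library (Python 3.9 or later) to compute an order in which every prerequisite comes before the target that needs it. The names RULES and build_order are made up for this example.

```python
from graphlib import TopologicalSorter

# Each target maps to the set of its prerequisites, as in Makefile no. 1.
RULES = {
    "output/figure_1.png": {
        "data/input_file_1.csv",
        "scripts/generate_histogram.py",
    },
    "output/figure_2.png": {
        "data/input_file_2.csv",
        "scripts/generate_histogram.py",
    },
    "output/report.pdf": {
        "report/report.tex",
        "output/figure_1.png",
        "output/figure_2.png",
    },
}


def build_order(rules):
    """Return all nodes so that every prerequisite precedes its target."""
    return list(TopologicalSorter(rules).static_order())


if __name__ == "__main__":
    for node in build_order(RULES):
        print(node)
```

The order among independent nodes (for instance the two figures) is not fixed, which is also what allows such targets to be built in parallel. A graph with a cycle has no valid order at all, and graphlib raises a CycleError in that case.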

Makefile no. 2 (all and clean)

In our first Makefile we have the basic rules in place. We could stick with this if we wanted to, but there are a few improvements we can make:

- We now have to explicitly call make output/report.pdf if we want to make the report.

- We have no way to clean up and start fresh.

Let’s remedy this by adding two new targets: all and clean. Again,

open your editor and change the contents to add the all and clean

rules:

# Makefile for analysis report

all: output/report.pdf

output/figure_1.png: data/input_file_1.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_1.csv -o output/figure_1.png

output/figure_2.png: data/input_file_2.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_2.csv -o output/figure_2.png

output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/ && pdflatex report.tex && mv report.pdf ../output/report.pdf

clean:
	rm -f output/report.pdf
	rm -f output/figure_*.png

Note that we’ve added the all target to the top of the file. This is because Make executes the first target when no explicit target is given. So you can now type make on the command line and it does the same as make all. Also, note that we’ve only added the report as the prerequisite of all because that’s our desired output and the other rules help to build that output. If you had multiple outputs, you could add these as further prerequisites to the all target.

The clean rule is typically at the bottom, but that’s more style than

requirement. Note that we use the -f flag to rm to stop it complaining

when there is no file to remove.

You can try out the new Makefile by running:

$ make clean

$ make

Makefile no. 3 (Phony Targets)

Typically, all and clean are declared as so-called phony targets. These are targets that don’t actually create an output file. A phony target is always run when it is requested or comes up as a prerequisite. Without this declaration, the recipe would no longer run once a file or directory called all or clean exists, because Make would consider the target up to date. We therefore add a line at the top of the Makefile so that it looks like this:

# Makefile for analysis report

.PHONY: all clean

all: output/report.pdf

output/figure_1.png: data/input_file_1.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_1.csv -o output/figure_1.png

output/figure_2.png: data/input_file_2.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i data/input_file_2.csv -o output/figure_2.png

output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/ && pdflatex report.tex && mv report.pdf ../output/report.pdf

clean:
	rm -f output/report.pdf
	rm -f output/figure_*.png

Phony targets are also useful when you want to use Make recursively. In that

case you would specify the subdirectories as phony targets. You can read more

about phony targets in the

documentation,

but for now it’s enough to know that all and clean are typically

declared as phony.

Another target that’s typically phony

is test, in case you have a directory of tests called test and want to

have a target to run them that’s also called test.
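Why the phony declaration matters can be shown with a small model of Make’s decision. The Python sketch below is only an approximation under simplifying assumptions (one rule, no chained rebuilds), and the function name should_run is invented for this example.

```python
import os
import tempfile


def should_run(target, prerequisites, phony_targets):
    """Decide whether the recipe for `target` has to be executed."""
    if target in phony_targets:
        return True  # phony targets are never considered up to date
    if not os.path.exists(target):
        return True  # nothing on disk yet, so the recipe must run
    target_mtime = os.path.getmtime(target)
    return any(os.path.getmtime(p) > target_mtime for p in prerequisites)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        clean = os.path.join(tmp, "clean")
        open(clean, "w").close()  # someone accidentally creates a file "clean"

        print("not phony:", should_run(clean, [], phony_targets=set()))
        print("phony    :", should_run(clean, [], phony_targets={clean}))
```

Because clean has no prerequisites, an existing file with that name can never be out of date, so without the phony declaration the recipe would silently be skipped.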

Makefile no. 4 (Automatic Variables and Pattern Rules)

There’s nothing wrong with the Makefile we have until now, but it’s somewhat verbose because we’ve declared all the targets explicitly using separate rules. We can simplify this by using Automatic Variables and Pattern Rules.

Automatic Variables. With automatic variables we can use the names of the prerequisites and targets in the recipe. Here’s how we would do that for the figure rules:

# Makefile for analysis report

.PHONY: all clean

all: output/report.pdf

output/figure_1.png: data/input_file_1.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

output/figure_2.png: data/input_file_2.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/ && pdflatex report.tex && mv report.pdf ../output/report.pdf

clean:
	rm -f output/report.pdf
	rm -f output/figure_*.png

We’ve replaced the input and output filenames in the recipes respectively by

$<, which denotes the first prerequisite and $@ which denotes the

target. There are more automatic variables that you could use, see the

documentation.
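To see what the automatic variables stand for, the following Python sketch fills them in by hand for one rule. It covers only $@, $< and $^ (all prerequisites, with duplicates removed), and the function name expand_automatic_variables is made up for this illustration.

```python
def expand_automatic_variables(recipe, target, prerequisites):
    """Substitute $@, $< and $^ in a single recipe line."""
    replacements = {
        "$@": target,
        "$<": prerequisites[0] if prerequisites else "",
        # dict.fromkeys keeps the order but drops repeated prerequisites.
        "$^": " ".join(dict.fromkeys(prerequisites)),
    }
    for variable, value in replacements.items():
        recipe = recipe.replace(variable, value)
    return recipe


if __name__ == "__main__":
    print(
        expand_automatic_variables(
            "python scripts/generate_histogram.py -i $< -o $@",
            target="output/figure_1.png",
            prerequisites=[
                "data/input_file_1.csv",
                "scripts/generate_histogram.py",
            ],
        )
    )
```

The printed command is the same one that was written out in full in the earlier Makefiles. This is also why the CSV file has to be listed as the first prerequisite: $< only picks up the first one.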

Pattern Rules. Notice that the figure recipes have become identical!

Because we don’t like to repeat ourselves, we can combine the two rules into a

single one by using pattern rules. Pattern rules allow you to use the %

symbol as a wildcard and combine the two rules into one:

# Makefile for analysis report

.PHONY: all clean

all: output/report.pdf

output/figure_%.png: data/input_file_%.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

output/report.pdf: report/report.tex output/figure_1.png output/figure_2.png
	cd report/ && pdflatex report.tex && mv report.pdf ../output/report.pdf

clean:
	rm -f output/report.pdf
	rm -f output/figure_*.png

The % symbol is now a wildcard that (in our case) takes the value 1 or

2 based on the input files in the data directory. You can check that

everything still works by running make clean followed by make.
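The way a pattern rule turns a requested target into concrete prerequisites can be sketched in a few lines of Python. This is a simplified model (one % per pattern, no special handling of directories), and the helper names match_stem and prerequisites_for are invented for this example.

```python
import re


def match_stem(pattern, target):
    """Return the text that % stands for, or None if the target does not match."""
    prefix, suffix = pattern.split("%", 1)
    regex = re.escape(prefix) + "(.+)" + re.escape(suffix)
    match = re.fullmatch(regex, target)
    return match.group(1) if match else None


def prerequisites_for(target, target_pattern, prerequisite_patterns):
    """Fill the stem of `target` into every prerequisite pattern."""
    stem = match_stem(target_pattern, target)
    if stem is None:
        return None  # the rule does not apply to this target
    return [p.replace("%", stem, 1) for p in prerequisite_patterns]


if __name__ == "__main__":
    print(
        prerequisites_for(
            "output/figure_2.png",
            "output/figure_%.png",
            ["data/input_file_%.csv", "scripts/generate_histogram.py"],
        )
    )
```

Note that the matching starts from the target that is requested (here by the report rule), and the prerequisite names are derived from it.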

Makefile no. 5 (Wildcards and Path Substitution)

When Makefiles get more complex, you may want to use more “bash-like” features such as building outputs for all the files in an input directory. While pattern rules get you a long way, Make also has features for wildcards and string/path manipulation for when pattern rules are insufficient.

While before our input files were numbered, we’ll now switch to a scenario

where they have more meaningful names. Let’s switch over to the big_data

branch:

$ git stash # stash the state of your working directory

$ git checkout big_data # checkout the big_data branch

If you inspect the data directory, you’ll notice that there are now additional input files that are named more meaningfully (the data are IMDb movie ratings by genre). Also, the report.tex file has been updated to work with the expected figures.

We’ll adapt our Makefile to create a figure in the output directory called figure_{genre}.png for each {genre}.csv file, while excluding the input_file_{N}.csv files. If we were to remove the input_file_{N}.csv files, pattern rules would be sufficient here. This highlights that sometimes your directory structure and Makefile should be developed hand in hand.

Before changing the Makefile, run

$ make clean

to remove the output files.

First, we’ll create a variable that lists all the CSV files in the data

directory and one that lists only the old input_file_{N}.csv files:

ALL_CSV = $(wildcard data/*.csv)

INPUT_CSV = $(wildcard data/input_file_*.csv)

A code convention for Makefiles is to use full caps for variable names and define them at the top of the file.

Next, we’ll list just the data that we’re interested in by filtering out the

INPUT_CSV from ALL_CSV:

DATA = $(filter-out $(INPUT_CSV),$(ALL_CSV))

This line uses the filter-out function to remove items in the INPUT_CSV variable from the ALL_CSV variable. Note that we use the $( ... ) syntax for both functions and variables (the ${ ... } syntax also works). Finally, we’ll use the DATA variable to create a FIGURES variable with the desired output:

FIGURES = $(patsubst data/%.csv,output/figure_%.png,$(DATA))

Here we’ve used the

patsubst

function to transform the input in the DATA variable (that follows the

data/{genre}.csv pattern) to the desired output filenames (using the

output/figure_{genre}.png pattern).
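If the three Make functions are new to you, it may help to see rough Python equivalents. The sketch below works on a plain list of file names instead of the file system, and the genre names action and comedy are placeholders chosen for this example (check the data directory for the real ones). The Python functions only approximate their Make counterparts: this wildcard uses fnmatch, and this filter_out only handles literal words.

```python
import fnmatch
import re


def wildcard(glob_pattern, files):
    """Keep the file names that match a shell-style glob."""
    return [f for f in files if fnmatch.fnmatchcase(f, glob_pattern)]


def filter_out(excluded, words):
    """Remove every word that appears in `excluded`."""
    return [w for w in words if w not in excluded]


def patsubst(pattern, replacement, words):
    """Rewrite words that match `pattern`; % carries over the matched part."""
    prefix, suffix = pattern.split("%", 1)
    regex = re.compile(re.escape(prefix) + "(.*)" + re.escape(suffix))
    result = []
    for word in words:
        match = regex.fullmatch(word)
        # Words that do not match the pattern are passed through unchanged.
        result.append(replacement.replace("%", match.group(1), 1) if match else word)
    return result


if __name__ == "__main__":
    # Hypothetical directory listing, for illustration only.
    files = [
        "data/action.csv",
        "data/comedy.csv",
        "data/input_file_1.csv",
        "data/input_file_2.csv",
    ]
    all_csv = wildcard("data/*.csv", files)
    input_csv = wildcard("data/input_file_*.csv", files)
    data = filter_out(input_csv, all_csv)
    figures = patsubst("data/%.csv", "output/figure_%.png", data)
    print(figures)
```

The last four assignments mirror the ALL_CSV, INPUT_CSV, DATA and FIGURES variables of the Makefile one to one.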

Now we can use these variables for the figure generation rule as follows:

$(FIGURES): output/figure_%.png: data/%.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

Note that this rule again applies a pattern: it takes a list of targets

(FIGURES) that all follow a given pattern (output/figure_%.png) and

based on that creates a prerequisite (data/%.csv). Such a pattern rule is

slightly different from the one we saw before because it uses two :

symbols. It is called a static pattern

rule.

Of course we also have to update the report.pdf rule:

output/report.pdf: report/report.tex $(FIGURES)
	cd report/ && pdflatex report.tex && mv report.pdf ../$@

and the clean rule:

clean:
	rm -f output/report.pdf
	rm -f $(FIGURES)

The resulting Makefile should now look like this:

# Makefile for analysis report
#
ALL_CSV = $(wildcard data/*.csv)
INPUT_CSV = $(wildcard data/input_file_*.csv)
DATA = $(filter-out $(INPUT_CSV),$(ALL_CSV))
FIGURES = $(patsubst data/%.csv,output/figure_%.png,$(DATA))

.PHONY: all clean

all: output/report.pdf

$(FIGURES): output/figure_%.png: data/%.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

output/report.pdf: report/report.tex $(FIGURES)
	cd report/ && pdflatex report.tex && mv report.pdf ../$@

clean:
	rm -f output/report.pdf
	rm -f $(FIGURES)

If you run this Makefile, it will need to build 28 figures. You may want to

use the -j flag to make to build these targets in parallel!

$ make -j 4

Makefile no. 6 (Advanced: Generating Rules using Call)

In a data science pipeline, it may be quite common to apply multiple scripts to the same data (for instance when you’re comparing classifiers or testing different parameters). In that case, it can become tedious to write a separate rule for each script when only the script name changes. To simplify this process, we can let Make expand a so-called canned recipe.

To follow along, switch to the canned branch:

$ make clean

$ git stash --all # note the '--all' flag so we also stash the Makefile

$ git checkout canned

On this branch you’ll notice that there is a new script in the scripts

directory called generate_qqplot.py. This script works similarly to the

generate_histogram.py script (it has the same command line syntax), but it

generates a QQ-plot. The

report.tex file has also been updated to incorporate these plots.

Now, we could simply add another rule that generates the QQ-plot figures, so that the Makefile becomes:

# Makefile for analysis report
#
ALL_CSV = $(wildcard data/*.csv)
DATA = $(filter-out $(wildcard data/input_file_*.csv),$(ALL_CSV))
HISTOGRAMS = $(patsubst data/%.csv,output/histogram_%.png,$(DATA))
QQPLOTS = $(patsubst data/%.csv,output/qqplot_%.png,$(DATA))

.PHONY: all clean

all: output/report.pdf

$(HISTOGRAMS): output/histogram_%.png: data/%.csv scripts/generate_histogram.py
	python scripts/generate_histogram.py -i $< -o $@

$(QQPLOTS): output/qqplot_%.png: data/%.csv scripts/generate_qqplot.py
	python scripts/generate_qqplot.py -i $< -o $@

output/report.pdf: report/report.tex $(HISTOGRAMS) $(QQPLOTS)
	cd report/ && pdflatex report.tex && mv report.pdf ../$@

clean:
	rm -f output/report.pdf
	rm -f $(HISTOGRAMS) $(QQPLOTS)

(which is what is currently in your repo.)

But as the number of scripts that you want to run on your data grows, this may lead to a large number of rules in the Makefile that are almost exactly the same. We can simplify this by creating a canned recipe (which is like a function) that takes both the name of the script and the name of the genre as input:

define run-script-on-data
output/$(1)_$(2).png: data/$(2).csv scripts/generate_$(1).py
	python scripts/generate_$(1).py -i $$< -o $$@
endef

Note that in this recipe we use $(1) for either histogram or qqplot and $(2) for the genre. These correspond to the arguments passed to the run-script-on-data canned recipe. Also, notice that we use $$< and $$@ in the actual recipe, with two $ symbols for escaping: the text is expanded once when the rules are generated, which turns $$< into $<, so that the automatic variables are only filled in when the recipe runs. To actually create all the targets, we need a line that calls this canned recipe. In our case, we use a double for loop over the genres and the scripts:

$(foreach genre,$(GENRES),\
  $(foreach script,$(SCRIPTS),\
    $(eval $(call run-script-on-data,$(script),$(genre))) \
  ) \
)
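It can be hard to picture what the nested foreach, call and eval produce. As a rough analogy, the Python sketch below generates the same rule text with a template and two loops. The names RULE_TEMPLATE and generate_rules and the genres action and comedy are placeholders for this illustration, and the $< and $@ are written with a single $ because they show the text after the first expansion.

```python
# One rule with placeholders for the script name and the genre.
RULE_TEMPLATE = (
    "output/{script}_{genre}.png: data/{genre}.csv scripts/generate_{script}.py\n"
    "\tpython scripts/generate_{script}.py -i $< -o $@\n"
)


def generate_rules(scripts, genres):
    """Return one rule per (genre, script) pair, like the double foreach."""
    return [
        RULE_TEMPLATE.format(script=script, genre=genre)
        for genre in genres
        for script in scripts
    ]


if __name__ == "__main__":
    # Hypothetical genre names, for illustration only.
    for rule in generate_rules(["histogram", "qqplot"], ["action", "comedy"]):
        print(rule)
```

Each printed rule is what Make ends up with after expanding the template for one pair of arguments, which it then reads as if the rule had been written in the Makefile by hand.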

The full Makefile then becomes:

# Makefile for analysis report
#
ALL_CSV = $(wildcard data/*.csv)
DATA = $(filter-out $(wildcard data/input_file_*.csv),$(ALL_CSV))
GENRES = $(patsubst data/%.csv,%,$(DATA))
SCRIPTS = histogram qqplot
HISTOGRAMS = $(patsubst %,output/histogram_%.png,$(GENRES))
QQPLOTS = $(patsubst %,output/qqplot_%.png,$(GENRES))

.PHONY: all clean

all: output/report.pdf

define run-script-on-data
output/$(1)_$(2).png: data/$(2).csv scripts/generate_$(1).py
	python scripts/generate_$(1).py -i $$< -o $$@
endef

$(foreach genre,$(GENRES),\
  $(foreach script,$(SCRIPTS),\
    $(eval $(call run-script-on-data,$(script),$(genre)))\
  )\
)

output/report.pdf: report/report.tex $(HISTOGRAMS) $(QQPLOTS)
	cd report/ && pdflatex report.tex && mv report.pdf ../$@

clean:
	rm -f output/report.pdf
	rm -f $(HISTOGRAMS) $(QQPLOTS)

Note that we’ve added a SCRIPTS variable that holds the histogram and

qqplot names. If we were to add another script that follows the same

pattern as these two, we would only need to add it to the SCRIPTS

variable.

To build all of this, run

$ make -j 4

Debugging Makefiles

Before wrapping up I wanted to briefly mention two functions that are very useful when writing Makefiles with complex variables: info and error. With the info function you can print the current value of a variable to stdout:

$(info $$DATA = ${DATA})

will print $DATA = ... with ... the content of the DATA variable (the $$ is an escaped dollar sign).

The error function stops execution at that point in the Makefile and prints the message you give it. This is useful in combination with the info function when you want to just print the variables and not run any further:

$(info $$DATA = ${DATA}) # will print the content of DATA

$(error Stopping here) # will exit Make at this point

And of course, you can run make -d to see the debug output. This can help you figure out where a rule fails or a syntax error occurs.

Closing Remarks

I hope this tutorial has given you some insight into how to use Make for your projects. Of course it isn’t feasible to expand on all the features of Make in such a short space, but I think I’ve covered the most important ones. A few things that may be relevant that I haven’t covered:

- Using @ to suppress echoing

- Specifying order in prerequisites

- Special targets other than .PHONY. For instance, .POSIX stops execution of a recipe upon the first failure, and .PRECIOUS allows you to specify targets that should not be deleted when Make is interrupted.

If you want to explore other uses of Make, I have collected some links to Makefiles that I use for various other projects:

- LaTeX project with a separate figure directory. This is an example of using Make recursively, which has some caveats (see Further Reading).

- A C project with unit tests, coverage, and documentation

- Python package. This Makefile is self-documenting: the default goal is help, which prints a brief description of each target to the command line.

- R package. Not mine but I’ve used something like this for several R packages.

- Real-world reproducible research paper. Some self-promotion! This Makefile generates all the empirical results for a paper (including constants, figures, and tables) from the raw input data, resulting in reproducibility through a single make invocation!

Thanks for your attention!

Further reading

Some links I’ve collected while writing this.

Sites

- Recursive Make Considered Harmful. This is a well-known paper on why you shouldn’t use nested makefiles. To summarise: if you do this Make can’t see the entire DAG and that leads to problems.

- Non-Recursive Make Considered Harmful: This is a research paper describing the failings of Make for large and complex builds.

- Discussion on HN on Make.

Tools

- Plot the DAG of the Makefile with makefile2graph.

- vim-background-make

Alternatives to Make

I’m not explicitly recommending any of these, but if you want features that Make doesn’t support or have a really big project with complex dependencies, then here are some alternatives. I would recommend not optimising your build system until you run into a problem with your current one. Optimising your build system might lead you down a rabbit hole that ends up taking more time than slow builds!

- Bazel: An open-source version of Google’s Blaze build system.

- Buck: Facebook’s build system.

- Tup: a fast build system that processes prerequisites bottom-up instead of Make’s top-down. The speed looks impressive and the paper describing it is interesting. Would have been great if it could be run on Makefiles too instead of requiring a Tupfile with different syntax.

- Walk: Make alternative that claims to be more flexible than Make because it doesn’t just rely on modification time. Makefile is now essentially a bash script.

Appendix

Directed Acyclic Graph

A Directed Acyclic Graph (DAG) is a graph of nodes and edges that is:

- directed: edges have a direction and you can only walk the graph in that direction

- acyclic: does not contain cycles: A can’t depend on B when B depends on A.

The latter property is of course quite handy for a build system. More on DAGs on Wikipedia.

Installing Make

First check if you have GNU Make installed already. In a terminal type:

$ make

If you get make: command not found (or similar), you don’t have make. If

you get make: *** No targets specified and no makefile found. Stop. you

do have make.

We’ll be using GNU Make in this tutorial. Verify that this is what you have by typing:

$ make --version

If you don’t have GNU make but have the BSD version, some things might not work as expected.

To install GNU Make, please follow these instructions:

Linux: Use your package manager to install Make. For instance on Arch Linux:

$ pacman -S make

and on Ubuntu:

$ sudo apt-get install build-essential

MacOS: If you have Homebrew, it’s simply:

$ brew install make

If you have a builtin Make implementation, please ensure that it’s GNU make by checking make --version.

Windows: Cygwin, probably?